For the second benchmark I am going to explore .NET compilation and benchmark performance using Avalonia’s code base. As I wrote in this previous post, I’m doing a series of benchmarks of .NET and JVM on Apple Silicon. While there are impressive native benchmarks, the fact is that it will be some time before the .NET runtime has native support, so I have to factor in the potential hit and problems with Rosetta. How much of a performance hit is there, and will it be enough that applications targeting it will have problems? All code and results are published here.

Avalonia is a great platform for writing cross-platform UI apps under .NET that target Windows, Mac, and Linux. While they didn’t have a DopeTest benchmark like Uno, I was interested to see the compilation performance as well as to run their internal suite of benchmarks. These benchmarks are not rendering benchmarks. They do test the performance of the underlying code, though, so they can be indicative of the performance of .NET under Rosetta.

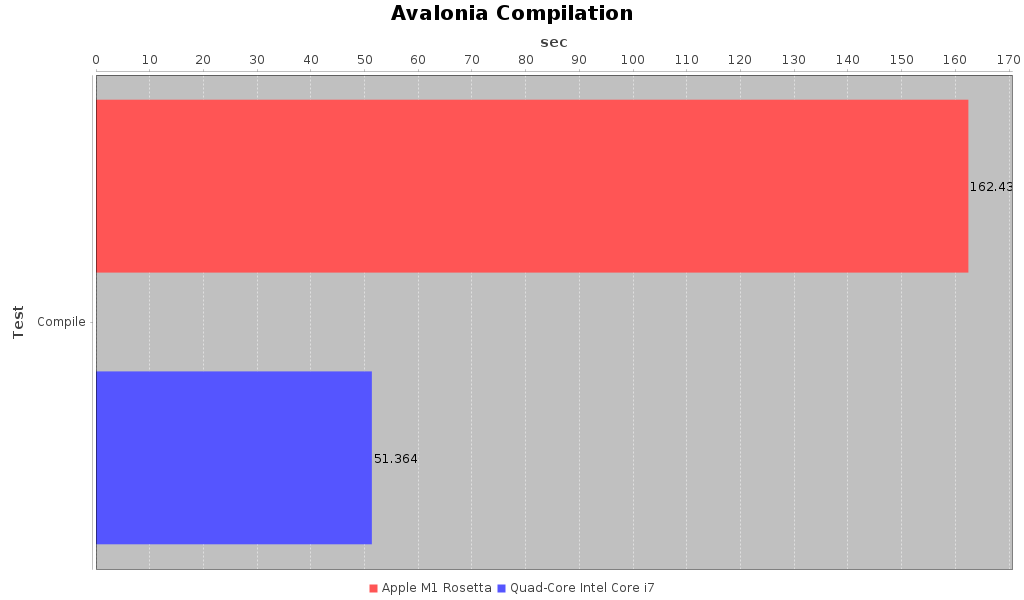

First let’s look at the compilation timings; full results with tabular data can be found here.

Avalonia Compilation Summary

As we can see, there is a huge compilation performance hit from running this under Rosetta on M1. It is over 3x slower than the Intel MBP. The comparable slowdown for the Java compilation test was 1.7x, not great but not as bad. Both of the test libraries, Avalonia here and Orekit under Java, have a lot of files to process and a deep package hierarchy. I can’t say for sure they are one hundred percent comparable, but they are on the same order of magnitude in complexity. How did the benchmark tests compare (full results with tabular data found here)?

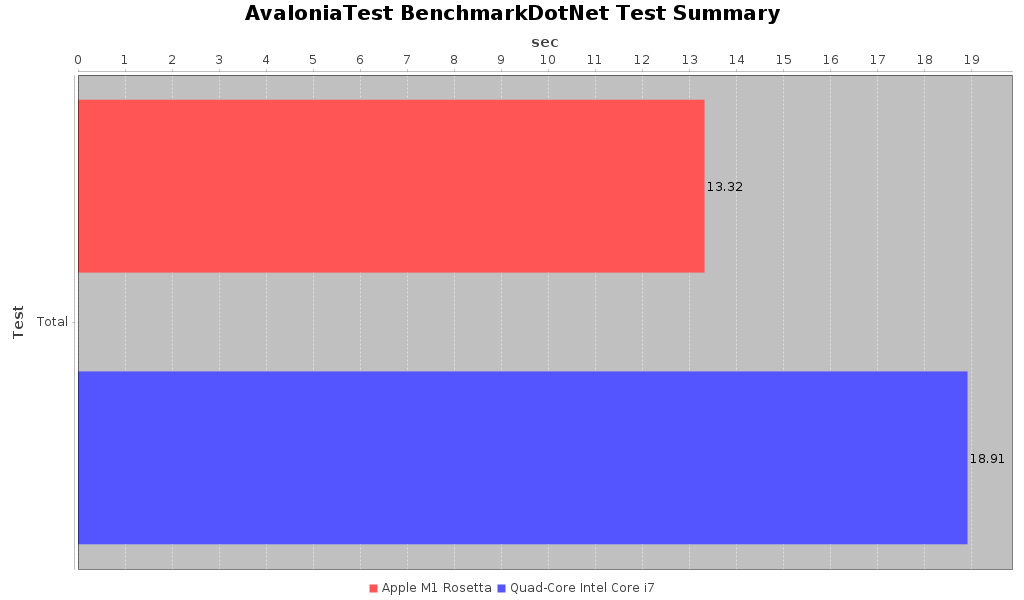

Avalonia Benchmark Summary, summing all of the individual test times across all the tests

This summary chart adds up the individual execution times of every test iteration that was run across all the tests. It’s not the pure performance of the tests themselves (which I’ll share in a moment), but it scales similarly. Interestingly, the Rosetta benchmark tests completed in 70% of the time it took for the Intel tests to complete. The total run time of the driver programs themselves was even slightly faster than that: 647.53 seconds under Rosetta on M1 versus 991.27 seconds on Intel. That points to some good runtime performance in aggregate. The actual tests themselves, though, are more of a mixed bag. Some tests are much faster while others are much slower. Only one approaches the extreme of the compilation slowdown: FindCommonVisualAncestor, which is 2x slower under Rosetta than on Intel. That one sounds pretty central to rendering, so it may end up being called frequently. As much Avalonia code as I’ve written, I have never delved into the internals.
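As a quick check on the driver run times quoted above, here is a minimal sketch of the arithmetic. The function name `relative_runtime` is just an illustrative helper, not something from the benchmark repository; the two totals are the figures stated in this post.

```python
def relative_runtime(candidate_seconds: float, baseline_seconds: float) -> float:
    """Return the candidate's run time as a fraction of the baseline.

    A value below 1.0 means the candidate finished faster than the baseline.
    """
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return candidate_seconds / baseline_seconds


# Total driver run times quoted in the post, in seconds.
rosetta_total = 647.53  # .NET under Rosetta on M1
intel_total = 991.27    # .NET on the Intel MBP

ratio = relative_runtime(rosetta_total, intel_total)
print(f"Rosetta driver run time is {ratio:.1%} of the Intel run time")
# prints "Rosetta driver run time is 65.3% of the Intel run time"
```

So the driver totals work out to roughly 65% of the Intel time, a bit better than the 70% figure from the summed per-iteration times.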

Overall it looks like compilation speeds may be a big drag under Rosetta on .NET, for reasons I have not yet ascertained. Runtime performance under Rosetta, though, looks likely to land anywhere from 30 percent faster to 30 percent slower for most operations. There are a few operations, however, whose performance could be impacted by a factor of two. It will be good to look at the results of the official .NET benchmark suite, which will also lead us to the one potentially big problem with the story of .NET under Rosetta.

2020-11-24
